Introduction

In the fast-moving world of artificial intelligence, Microsoft has released Orca 2, a family of small language models designed to handle complex reasoning tasks while keeping a strong command of natural language. This article walks through what Orca 2 is, how it was trained, how it performs, and where it still falls short.

Understanding the Context

Orca 2 is part of Microsoft’s work on small language models, meaning models with roughly 13 billion parameters or fewer. That focus is a deliberate contrast with large models such as GPT-4, PaLM, or the 70-billion-parameter version of Llama 2; PaLM, for example, has 540 billion parameters. Smaller models are simpler to train, deploy, and run, and they need far less compute and energy, which makes them practical and affordable for a much wider range of organizations.
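To make the cost argument concrete, here is a back-of-the-envelope sketch of how much memory is needed just to store the weights of 7B, 13B, and 70B models. It assumes a fixed number of bytes per parameter (2 for 16-bit weights, 0.5 for 4-bit quantization) and ignores activations, the KV cache, and framework overhead, so real usage will be higher.

```python
# Rough estimate of the memory needed just to hold model weights.
# Assumption: a fixed byte cost per parameter; activations, KV cache,
# and framework overhead are ignored, so real memory use is higher.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1, "int4": 0.5}


def weight_memory_gb(num_params: float, precision: str = "fp16") -> float:
    """Return approximate weight storage in gigabytes (10**9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


if __name__ == "__main__":
    for name, params in [("7B", 7e9), ("13B", 13e9), ("70B", 70e9)]:
        print(
            f"{name}: ~{weight_memory_gb(params):.0f} GB in fp16, "
            f"~{weight_memory_gb(params, 'int4'):.1f} GB in 4-bit"
        )
```

Under these assumptions a 13B model needs about 26 GB in 16-bit precision versus about 140 GB for a 70B model, which is the difference between a single high-end GPU and a multi-GPU server.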

Challenges and Solutions

Small models come with a catch, though: it is hard to make them perform well and stay accurate on complex tasks. Orca 2 takes an unusual approach to this problem. It comes in 7-billion- and 13-billion-parameter versions, both fine-tuned from Llama 2, and it is trained on reasoning demonstrations from a larger, more capable model such as GPT-4 in an effort to reproduce that model’s problem-solving ability despite its much smaller size.

Learning Strategies and Techniques

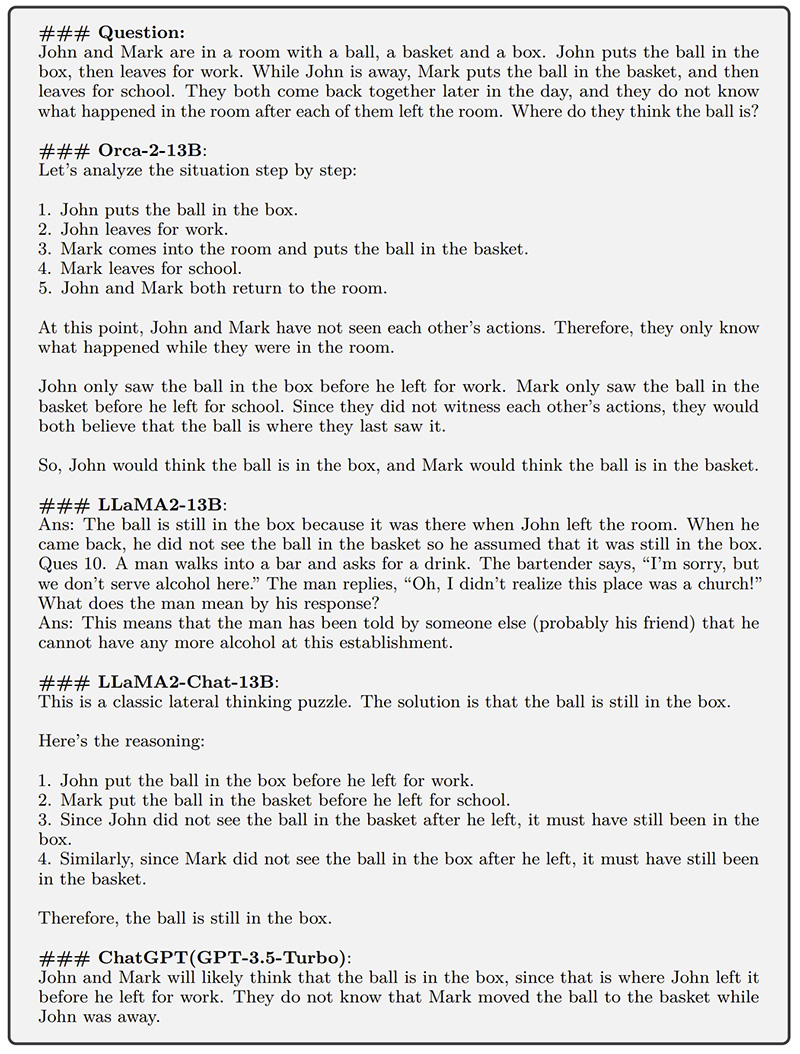

Rather than imitating a single style of answer, Orca 2 is trained on synthetic data from a larger teacher model that demonstrates several reasoning strategies: step-by-step processing, recall then generate, recall-reason-generate, extract-generate, and direct answer. The goal is for the model to learn which strategy suits which kind of task, so that it can tackle a wider range of complex problems.
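The Orca 2 paper describes a technique it calls prompt erasure: the teacher is given a detailed, strategy-specific instruction, but the student is trained on the teacher’s answer paired with a generic instruction, so it must learn on its own when each strategy applies. The sketch below illustrates that idea under stated assumptions. The prompt strings, `GENERIC_SYSTEM_PROMPT`, `TrainingExample`, and `build_student_example` are all hypothetical names invented for illustration, and `teacher` stands in for a call to a larger model; this is not Microsoft’s training code.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical teacher-side instructions, one per strategy named above.
# The wording is illustrative only; these are not the prompts Microsoft used.
STRATEGY_PROMPTS = {
    "step_by_step": "Solve the problem step by step, showing each intermediate result.",
    "recall_then_generate": "First recall the relevant facts, then write the answer.",
    "recall_reason_generate": "Recall the relevant facts, reason over them, then answer.",
    "extract_generate": "Extract the key information from the text, then answer.",
    "direct_answer": "Give the final answer directly and concisely.",
}

# Hypothetical generic instruction shown to the student after prompt erasure.
GENERIC_SYSTEM_PROMPT = "You are a helpful assistant. Think carefully before answering."


@dataclass
class TrainingExample:
    system: str  # instruction the student model sees
    user: str    # the task or question
    target: str  # the teacher's answer the student learns to reproduce


def build_student_example(
    strategy: str,
    question: str,
    teacher: Callable[[str, str], str],
) -> TrainingExample:
    """Query the teacher with a detailed strategy prompt, then erase that prompt.

    `teacher` is any callable taking (system_prompt, question) and returning text;
    in practice it would wrap a call to a larger model.
    """
    detailed_prompt = STRATEGY_PROMPTS[strategy]
    answer = teacher(detailed_prompt, question)
    # Prompt erasure: keep the teacher's strategy-shaped answer but replace the
    # detailed instruction with a generic one, so the student has to infer
    # which strategy the task calls for.
    return TrainingExample(system=GENERIC_SYSTEM_PROMPT, user=question, target=answer)
```

With a stand-in teacher such as `lambda system, q: f"[{system}] ..."`, the returned example keeps the teacher’s step-by-step answer while its `system` field holds only the generic prompt.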

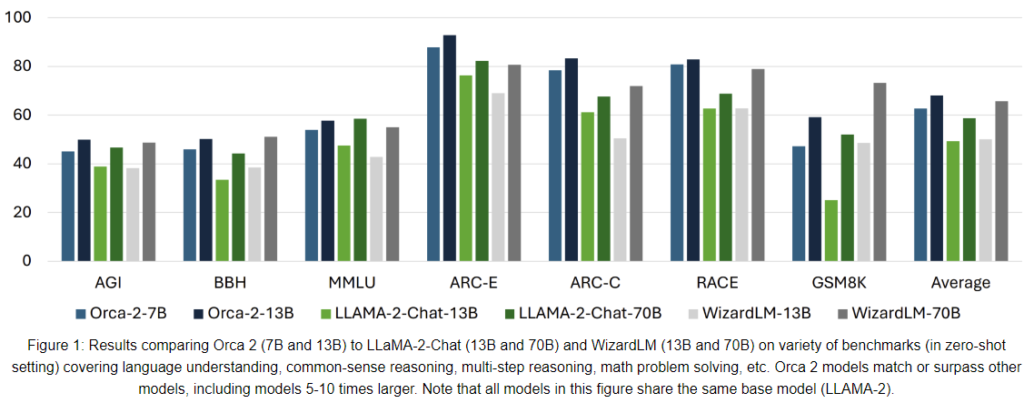

Performance Evaluation

Orca 2’s benchmark results are its strongest selling point. A headline example is GSM8K, a dataset of roughly 8.5K linguistically diverse grade-school math word problems that require multi-step reasoning. Across the reported benchmarks, Orca 2 outperforms models of similar size, including the original Orca, and performs at a level similar to or better than models 5 to 10 times larger, such as Llama 2 Chat 70B, although it still trails GPT-4. Multi-step mathematical reasoning of the kind GSM8K tests is where these gains are most visible.
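As a rough illustration of how a GSM8K score can be computed, the sketch below uses exact match on the final number: GSM8K reference solutions end with a line of the form `#### <answer>`, and the model’s answer is taken heuristically as the last number in its output. This extraction rule is an assumption for illustration; the Orca 2 evaluation may parse answers differently, and the function names are hypothetical.

```python
import re
from typing import List, Optional

# Matches integers or decimals, optionally with thousands separators (e.g. "1,250.5").
NUMBER_RE = re.compile(r"-?\d[\d,]*(?:\.\d+)?")


def reference_answer(solution: str) -> float:
    """GSM8K reference solutions end with a line of the form '#### <answer>'."""
    return float(solution.split("####")[-1].strip().replace(",", ""))


def predicted_answer(model_output: str) -> Optional[float]:
    """Heuristic: treat the last number in the model's output as its final answer."""
    matches = NUMBER_RE.findall(model_output)
    if not matches:
        return None
    return float(matches[-1].replace(",", ""))


def exact_match_accuracy(outputs: List[str], solutions: List[str]) -> float:
    """Fraction of problems whose predicted final number equals the reference."""
    if len(outputs) != len(solutions) or not solutions:
        raise ValueError("need equal-length, non-empty lists")
    hits = 0
    for output, solution in zip(outputs, solutions):
        pred = predicted_answer(output)
        if pred is not None and abs(pred - reference_answer(solution)) < 1e-9:
            hits += 1
    return hits / len(solutions)
```

Scoring only the final number keeps the metric simple, but it means a model can be marked correct despite flawed intermediate steps, or wrong if it ends its answer with an unrelated number.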

Beyond Mathematics

Orca 2’s strengths are not limited to math. It also performs well on BIG-Bench Hard, a collection of difficult tasks that includes logic puzzles, word problems, and IQ-test-style questions, and on questions drawn from professional and academic exams such as the SAT, LSAT, GRE, and GMAT. Together, these results suggest that its reasoning ability carries over to a broad range of tasks.

Open Source Initiative

Microsoft has publicly released Orca 2’s model weights, so researchers and developers can access, use, and build on the model. Beyond any strategic benefit, the release invites the community to help push small language models further.

Distinguishing Orca 2 From Its Predecessor

Orca 2 and the original Orca share a 13-billion-parameter size (Orca 2 also comes in a 7-billion-parameter version), but the similarities largely end there. Orca 2 is built on Llama 2, and it shows stronger reasoning, better benchmark scores, and more fluent language than its predecessor.

Areas of Caution

Orca 2 is not without limitations. Like other language models, it can inherit biases from its training data, struggle when the context is complex or ambiguous, and raise ethical concerns. Keeping its outputs aligned with human values, and preventing discriminatory responses or the spread of misinformation, requires ongoing attention.

Accessible and Versatile

Orca 2 is easy to try. You can run it locally in a Python environment or through a desktop interface such as LM Studio, or use it online through platforms like Hugging Face or Replicate. It can answer questions, generate and summarize text, and even write code.
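For running Orca 2 locally in Python, here is a minimal sketch using the Hugging Face `transformers` library. It assumes the `microsoft/Orca-2-7b` model ID on Hugging Face, the ChatML-style prompt format (`<|im_start|>` / `<|im_end|>` markers) shown on the Orca 2 model card, and that `torch`, `transformers`, and `accelerate` (needed for `device_map="auto"`) are installed. The system message is a hypothetical placeholder, and the helper names are invented for illustration. Only `build_chatml_prompt` runs without downloading the model; `generate` fetches roughly 14 GB of weights on first use.

```python
# Hypothetical placeholder; the model card suggests its own system message.
DEFAULT_SYSTEM = "You are a helpful assistant."


def build_chatml_prompt(user_message: str, system_message: str = DEFAULT_SYSTEM) -> str:
    """Wrap a message in the ChatML-style format used by Orca 2 (assumed from the model card)."""
    return (
        f"<|im_start|>system\n{system_message}<|im_end|>\n"
        f"<|im_start|>user\n{user_message}<|im_end|>\n"
        f"<|im_start|>assistant"
    )


def generate(user_message: str, model_id: str = "microsoft/Orca-2-7b",
             max_new_tokens: int = 256) -> str:
    """Load the model and return its reply to a single user message."""
    # Imported lazily so the prompt helper above works without these heavy dependencies.
    import torch
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(model_id, use_fast=False)
    model = AutoModelForCausalLM.from_pretrained(
        model_id, torch_dtype=torch.float16, device_map="auto"
    )
    inputs = tokenizer(build_chatml_prompt(user_message), return_tensors="pt").to(model.device)
    output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)
    # Decode only the newly generated tokens, skipping the echoed prompt.
    new_tokens = output_ids[0][inputs["input_ids"].shape[1]:]
    return tokenizer.decode(new_tokens, skip_special_tokens=True)
```

Calling `generate("Explain why the sky is blue in two sentences.")` would download the weights and return the model’s reply; swapping `model_id` for `microsoft/Orca-2-13b` trades more memory for the larger model.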

Conclusion

Orca 2 reflects Microsoft’s ongoing commitment to advancing AI and stands out among small language models. Its reasoning performance, which matches or beats models several times its size on a number of benchmarks, suggests that small models can do more than conventional wisdom assumed. As people start working with Orca 2, it should be used responsibly and ethically. In a crowded AI landscape, Orca 2 is less a finished product than a demonstration of how much smaller, efficient language models can achieve.

Source: https://www.microsoft.com/en-us/research/blog/orca-2-teaching-small-language-models-how-to-reason/
