A big question nowadays is how to make open-source AI models safe. Meta's new project, Purple Llama, has you covered. Its aim is to ensure that generative AI models, which are useful but potentially harmful, can be used in a controlled way, so that people benefit from them without being hurt. There is plenty that can go wrong if you don't watch out: these models might produce harmful content or even fake news.

Purple Llama currently has two main components:

- Llama Guard

- Cybersec Eval

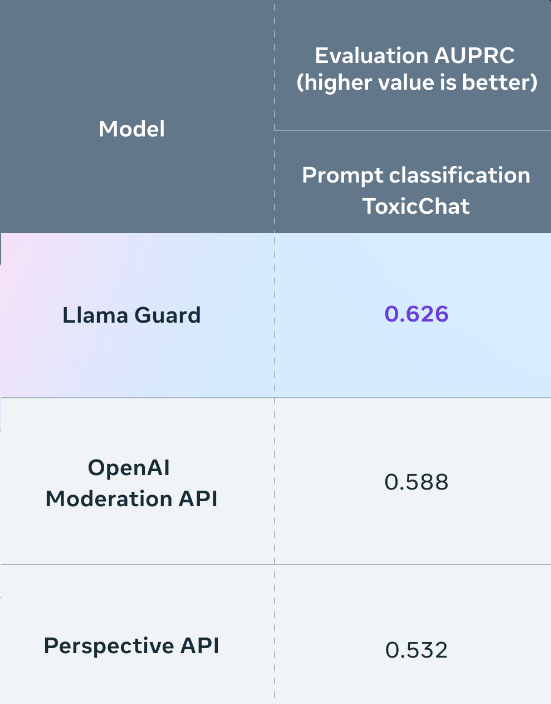

Llama Guard

Llama Guard is a safeguard model that adds a layer of protection to existing LLM deployments and APIs. It classifies both the prompts sent to a large language model and the responses the model produces, flagging unsafe content such as discriminatory or hateful language. Developers building generative applications (applications that create content on their own) should therefore plan for this kind of input and output filtering.
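The pattern described above, checking both what goes into a model and what comes out, can be sketched as a small wrapper. This is a minimal sketch, not Meta's implementation: it assumes the classifier replies with `safe`, or with `unsafe` followed by a line of comma-separated category codes (the verdict format described for Llama Guard), and `guarded_chat`, `classify`, `generate`, and `fake_classify` are hypothetical placeholders. In a real deployment, `classify` would wrap a call to the Llama Guard model itself.

```python
from dataclasses import dataclass, field
from typing import Callable

Conversation = list[dict[str, str]]


@dataclass
class GuardVerdict:
    safe: bool
    categories: list[str] = field(default_factory=list)


def parse_guard_output(text: str) -> GuardVerdict:
    """Parse a verdict of the assumed form 'safe' or 'unsafe\\n<codes>'."""
    lines = [line.strip() for line in text.strip().splitlines() if line.strip()]
    if not lines:
        raise ValueError("empty guard output")
    if lines[0] == "safe":
        return GuardVerdict(safe=True)
    if lines[0] == "unsafe":
        # Second line (if present) lists violated category codes, e.g. "O1,O3".
        cats = [c.strip() for c in lines[1].split(",") if c.strip()] if len(lines) > 1 else []
        return GuardVerdict(safe=False, categories=cats)
    raise ValueError(f"unexpected guard output: {lines[0]!r}")


def guarded_chat(
    prompt: str,
    generate: Callable[[str], str],
    classify: Callable[[Conversation], str],
    refusal: str = "Sorry, I can't help with that.",
) -> str:
    # 1. Input check: classify the user's prompt before it reaches the model.
    if not parse_guard_output(classify([{"role": "user", "content": prompt}])).safe:
        return refusal
    response = generate(prompt)
    # 2. Output check: classify the response in the context of the conversation.
    convo = [
        {"role": "user", "content": prompt},
        {"role": "assistant", "content": response},
    ]
    if not parse_guard_output(classify(convo)).safe:
        return refusal
    return response


def fake_classify(convo: Conversation) -> str:
    # Hypothetical stand-in for a real Llama Guard call: flags any mention of "malware".
    text = " ".join(m["content"] for m in convo).lower()
    return "unsafe\nO3" if "malware" in text else "safe"


print(guarded_chat("Write a haiku about llamas", lambda p: "Soft wool on the hills", fake_classify))
```

Running the same classifier on both sides means an innocent-looking prompt can still be blocked if the model's answer turns out to be unsafe.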

Cybersec Eval

Cybersec Eval is a set of tools for checking whether large language models are safe with respect to cyber threats. It has four parts:

- Tests for insecure coding practices

- Tests for compliance with cyberattack requests

- Input/output safety

- Threat information

Together, these tests measure how often a model suggests insecure code and how readily it goes along with cyberattack tactics, helping ensure that models don't suggest dangerous code or assist with cyberattacks. These checks help with security and make life easier both for researchers studying the safety of language models and for developers who need to meet industry standards.
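The insecure-coding tests can be pictured as a static check run over many model suggestions, reporting the share that trip at least one rule. The sketch below illustrates that idea only: the tiny regex rule set (`RULES`) and the helpers `find_issues` and `insecure_rate` are hypothetical, and Meta's actual detector and rules are not reproduced here.

```python
import re
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    name: str
    pattern: re.Pattern


# Hypothetical, deliberately tiny rule set; a real detector would cover far more
# weaknesses and languages.
RULES = [
    Rule("weak-hash-md5", re.compile(r"\bhashlib\.md5\(")),
    Rule("shell-injection", re.compile(r"subprocess\.\w+\(.*shell\s*=\s*True")),
    Rule("eval-call", re.compile(r"\beval\(")),
]


def find_issues(code: str) -> list[str]:
    """Return the names of all rules that match anywhere in a code suggestion."""
    return [rule.name for rule in RULES if rule.pattern.search(code)]


def insecure_rate(suggestions: list[str]) -> float:
    """Fraction of suggestions flagged by at least one rule."""
    if not suggestions:
        return 0.0
    flagged = sum(1 for s in suggestions if find_issues(s))
    return flagged / len(suggestions)


suggestions = [
    "import hashlib\ndigest = hashlib.md5(data).hexdigest()",
    "import hashlib\ndigest = hashlib.sha256(data).hexdigest()",
    "subprocess.run(cmd, shell=True)",
    "result = eval(user_input)",
]
print(insecure_rate(suggestions))  # 0.75
```

Pattern matching is cheap enough to run over thousands of generated completions, which is why it suits a benchmark; its trade-off is that it can miss insecure code that does not match any rule.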

Purple Llama doesn't just help developers create AI that is safe, ethical, and respectful of human rights; it also helps them test their AI, especially large language models, for flaws. With tools like Cybersec Eval, they can easily check whether a model has generated unsafe code or violated privacy policies, not only to prevent other people from getting … As a platform for understanding and trusting AI-generated content, Purple Llama gives developers tools to check whether generated text or images are misleading, so that users are not harmed by wrong information. Six months ago, there was also a Purple Llama for people working in security about how their research could be used later. Someone did post some of this work online, but his comment …