Recently, a tweet by Steven Heidel, a core member of the OpenAI team, sparked considerable debate in this rapidly developing field. The tweet, “brace yourselves, agi is coming,” was a response to Jan Leike’s announcement of OpenAI’s Preparedness Framework, the company’s new strategy for dealing with the threats posed by advanced AI.

OpenAI’s Preparedness Framework

At the center of OpenAI’s effort is the “Preparedness Framework,” which can be thought of as an operational plan for ensuring that its most advanced models are developed and used safely and responsibly. As AI advances, preventing unexpected side effects, or outright harm to individuals, is a problem that demands careful monitoring. The framework provides mechanisms for maintaining control and addressing potential problems before they cause damage.

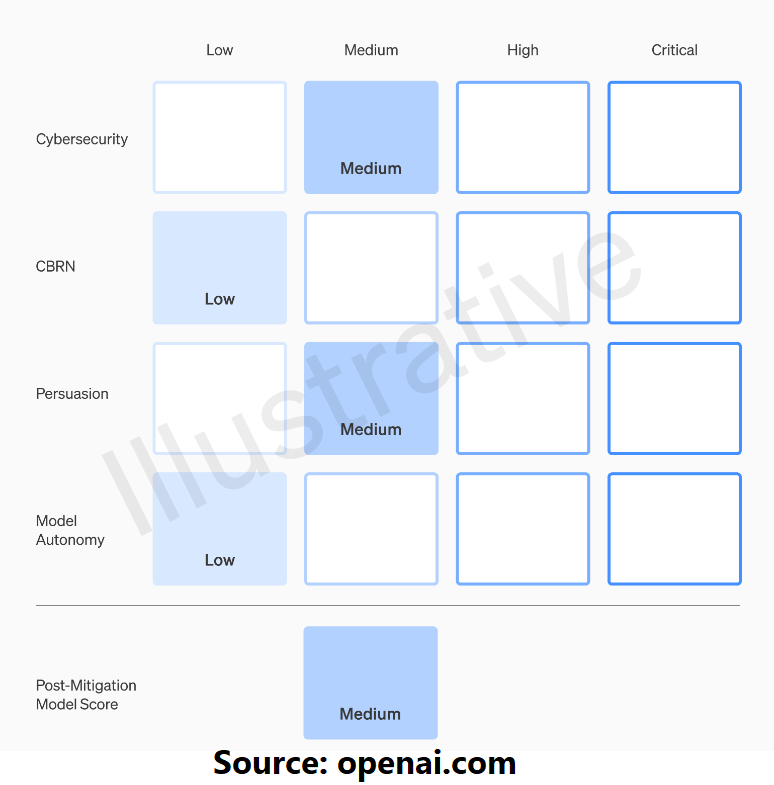

Risk Assessment: A Crucial Component

Evaluating the risks posed by different AI systems is a major part of the framework. According to the announcement, OpenAI aims to create a risk “scorecard” that will be used to judge how much danger a given AI model poses. If a system is deemed too dangerous, OpenAI may modify it to make it safer rather than abandoning it altogether.

Identifying Potential Risks

Let’s look at the key risk areas that OpenAI is addressing within its Preparedness Framework:

Cybersecurity

The prospect of AI being used to break into computer systems is a major concern. OpenAI aims to keep its AI from falling into the hands of hackers or governments and being turned against the public.

CBRN Threats

Addressing CBRN (chemical, biological, radiological, and nuclear) threats has become increasingly important. OpenAI is paying close attention to preventing its AI from becoming a tool for producing dangerous weapons, such as deadly biological agents.

AI Persuasion

It’s frightening to think of AI being used to manipulate or pressure people, for example during elections. OpenAI is actively working to prevent its products from being used to sway public opinion in this way.

Autonomy in AI Models

Autonomy raises concerns about AI systems that can teach and improve themselves. OpenAI points out that such systems need close supervision and must be prevented from operating completely independently.

GPT-5: The Next Frontier

Alongside these efforts, OpenAI is also preparing for GPT-5, the next stage in its cutting-edge development. Balancing power and safety in GPT-5 is no small feat. The Preparedness Framework is a work in progress and will evolve as OpenAI gains further insight into the problem.

Why Does OpenAI’s Approach Matter?

OpenAI’s public commitment to developing AI responsibly shows that the company is aware of what is at stake. The more powerful and capable AI becomes, the more important it is to balance technological progress against the welfare of society.

Conclusion: Striking the Right Balance

Why does OpenAI go to all the trouble of developing its own Preparedness Framework? Because as machine capabilities grow rapidly, ethical questions become all-important. I believe OpenAI is taking the lead here, actively confronting potential risks and working toward a future in which AI is put to positive use without causing harm to anyone.